Output options in PROC REG

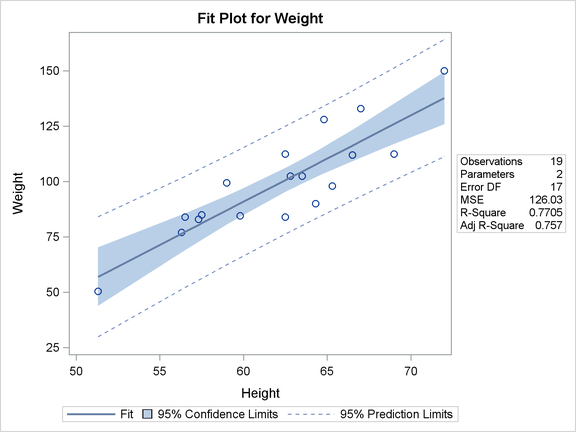

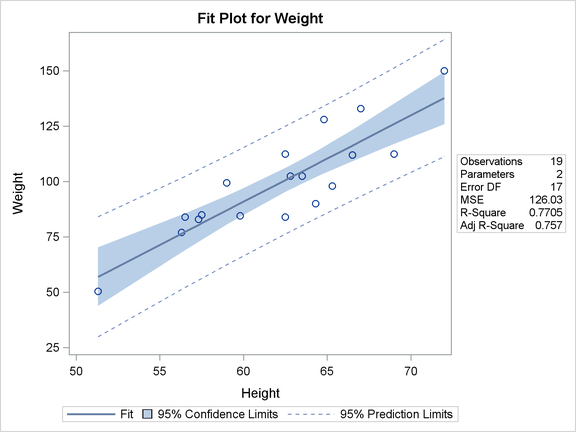

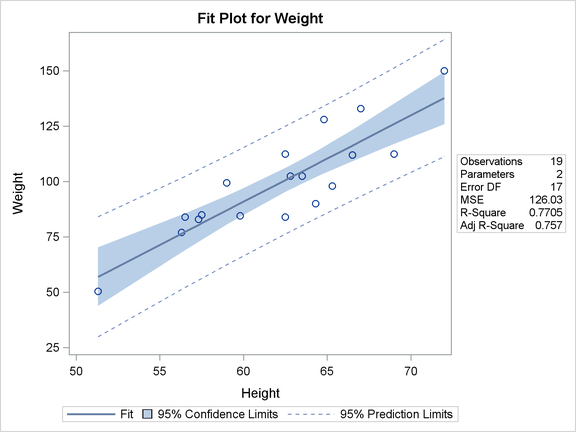

PROC REG is one of the many statistical procedures in SAS that can be used to fit a linear regression model. It is the first of many procedures for linear models and one of the most comprehensive for linear regression or, more precisely, ordinary least squares (OLS) regression.

According to the SAS documentation, regression analysis is the analysis of the relationship between a response or outcome variable and another set of variables. The relationship is expressed through a statistical model equation that predicts a response variable (also called a dependent variable or criterion) from a function of regressor variables (also called independent variables, predictors, explanatory variables, factors, or carriers) and parameters. In a linear regression model, the predictor function is linear in the parameters but not necessarily linear in the regressor variables. The parameters are estimated so that a measure of fit is optimized.

In statistics, linear regression is an approach to modeling the relationship between a scalar variable y and one or more variables denoted X. In linear regression, data are modeled using linear functions, and unknown model parameters are estimated from the data. Such models are called linear models. Most commonly, linear regression refers to a model in which the conditional mean of y given the value of X is an affine function of X. Less commonly, linear regression can refer to a model in which the median, or some other quantile, of the conditional distribution of y given X is expressed as a linear function of X. Like all forms of regression analysis, linear regression focuses on the conditional probability distribution of y given X, rather than on the joint probability distribution of y and X, which is the domain of multivariate analysis.

Linear regression was the first type of regression analysis to be studied rigorously and to be used extensively in practical applications.
This is because models that depend linearly on their unknown parameters are easier to fit than models that are non-linearly related to their parameters, and because the statistical properties of the resulting estimators are easier to determine.

Linear regression has many practical uses. Most applications fall into one of two broad categories: prediction or forecasting, and quantifying the strength of the relationship between the response and the explanatory variables. Linear regression models are often fitted using the least squares approach, although other fitting methods exist. Conversely, the least squares approach can be used to fit models that are not linear models.

According to the SAS documentation, the REG and GLM procedures are very closely related in terms of the assumptions about the basic model and the estimation principles. Both procedures estimate parameters by ordinary or weighted least squares and assume homoscedastic, uncorrelated model errors with zero mean. An assumption of normality of the model errors is not necessary for parameter estimation, but it is implied in confirmatory inference based on the parameter estimates, that is, in the computation of tests, p-values, and confidence and prediction intervals.

The GLM procedure supports a CLASS statement for the levelization and parameterization of classification variables in statistical models. In the REG procedure, classification variables are accommodated by including the necessary dummy regressor variables. Most of the statistics based on predicted and residual values that are available in PROC REG are also available in PROC GLM. However, PROC GLM does not produce collinearity diagnostics, influence diagnostics, or scatter plots. In addition, PROC GLM allows only one model and fits the full model.

Both procedures are interactive, in that they do not stop after processing a RUN statement. The procedures accept statements until a QUIT statement is submitted.

PROC REG constructs only one crossproducts matrix for the variables in all regressions. If any variable needed for any regression is missing, the observation is excluded from all estimates.
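
The difference in how the two procedures handle a classification variable, and the RUN/QUIT behavior described above, can be sketched as follows. This is a minimal illustration, not code from the original article: it assumes the SASHELP.CLASS sample data set is available, and the data set name `work.class_dummy` and the variable name `male` are made up for the example.

```sas
/* Sketch only: SASHELP.CLASS, work.class_dummy, and "male" are illustrative. */
data work.class_dummy;
   set sashelp.class;
   male = (sex = 'M');   /* dummy regressor: 1 for males, 0 for females */
run;

/* PROC REG has no CLASS statement, so the dummy variable is supplied directly. */
proc reg data=work.class_dummy;
   model weight = height male;
run;
   /* PROC REG is still active after RUN; further statements could go here. */
quit;   /* QUIT ends the procedure */

/* PROC GLM builds the design columns for SEX from the CLASS statement. */
proc glm data=sashelp.class;
   class sex;
   model weight = height sex / solution;   /* SOLUTION prints the estimates */
run;
quit;
```

The two fits describe the same model, although the sign and reference level of the sex effect depend on how PROC GLM parameterizes the CLASS variable.
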
If you include variables with missing values in the VAR statement, the corresponding observations are excluded from all analyses, even if you never include the variables in a model. PROC REG assumes that you might want to include these variables after the first RUN statement and deletes observations with missing values. One very common error encountered by users is the "all missing observations" error. It is usually caused by two or more variables with a large number of missing values that together leave every observation with at least one missing value.

PROC REG enables you to change interactively both the model and the data used to compute the model, and to produce and highlight scatter plots. See the section Using PROC REG Interactively for an overview of interactive analysis that uses PROC REG. The following statements can be used interactively without reinvoking PROC REG: ADD, DELETE, MODEL, MTEST, OUTPUT, PAINT, PLOT, PRINT, REFIT, RESTRICT, REWEIGHT, and TEST. All interactive features are disabled if there is a BY statement.

The ADD, DELETE, and REWEIGHT statements can be used to modify the current MODEL. Every use of an ADD, DELETE, or REWEIGHT statement causes the model label to be modified by attaching an additional number to it. This number is the cumulative total of the number of ADD, DELETE, or REWEIGHT statements following the current MODEL statement.

PROC REG supports several model-selection methods, chosen with the SELECTION= option in the MODEL statement.

The NONE method is the default and provides no model selection capability. The complete model specified in the MODEL statement is used to fit the model. For many regression analyses, this might be the only method you need.

The forward-selection technique (FORWARD) begins with no variables in the model. For each independent variable, the FORWARD method calculates an F statistic that reflects the variable's contribution to the model if it is included. The p-values for these F statistics are compared to the SLENTRY= value specified in the MODEL statement (0.50 if SLENTRY= is omitted). If no F statistic is significant at the SLENTRY= level, the FORWARD method stops. Otherwise, the FORWARD method adds the variable that has the largest F statistic to the model. The FORWARD method then calculates F statistics again for the variables still remaining outside the model, and the evaluation process is repeated.
Thus, variables are added one by one to the model until no remaining variable produces a significant F statistic. Once a variable is in the model, it stays.

The backward elimination technique (BACKWARD) begins by calculating F statistics for a model that includes all of the independent variables. The variables are then deleted from the model one by one until all the variables remaining in the model produce F statistics significant at the SLSTAY= level (0.10 if SLSTAY= is omitted). At each step, the variable showing the smallest contribution to the model is deleted.

The stepwise method (STEPWISE) is a modification of the forward-selection technique and differs in that variables already in the model do not necessarily stay there. As in forward selection, variables are added one by one, and the F statistic for a variable to be added must be significant at the SLENTRY= level. After a variable is added, however, the stepwise method looks at all the variables already in the model and deletes any variable that does not produce an F statistic significant at the SLSTAY= level. Only after this check is made and the necessary deletions are accomplished can another variable be added to the model.

The maximum R² improvement technique (MAXR) does not settle on a single model. Instead, it tries to find the "best" one-variable model, the "best" two-variable model, and so forth, although it is not guaranteed to find the model with the largest R² for each size. The MAXR method begins by finding the one-variable model producing the highest R². Then another variable, the one that yields the greatest increase in R², is added. Once the two-variable model is obtained, each of the variables in the model is compared to each variable not in the model. For each comparison, the MAXR method determines whether removing one variable and replacing it with the other variable increases R². After comparing all possible switches, the MAXR method makes the switch that produces the largest increase in R². Comparisons begin again, and the process continues until the MAXR method finds that no switch could increase R². Thus, the two-variable model achieved is considered the "best" two-variable model the technique can find. Another variable is then added to the model, and the comparing-and-switching process is repeated to find the "best" three-variable model, and so forth.

The difference between the STEPWISE method and the MAXR method is that all switches are evaluated before any switch is made in the MAXR method.
In the STEPWISE method, the "worst" variable might be removed without considering what adding the "best" remaining variable might accomplish. The MAXR method might require much more computer time than the STEPWISE method.

The minimum R² improvement technique (MINR) closely resembles the MAXR method, but the switch chosen is the one that produces the smallest increase in R². For a given number of variables in the model, the MAXR and MINR methods usually produce the same "best" model, but the MINR method considers more models of each size.

The RSQUARE method finds subsets of independent variables that best predict a dependent variable by linear regression in the given sample. You can specify the largest and smallest number of independent variables to appear in a subset and the number of subsets of each size to be selected. The RSQUARE method can efficiently perform all possible subset regressions and display the models in decreasing order of R² magnitude within each subset size. Other statistics are available for comparing subsets of different sizes. These statistics, as well as estimated regression coefficients, can be displayed or output to a SAS data set.

The subset models selected by the RSQUARE method are optimal in terms of R² for the given sample, but they are not necessarily optimal for the population from which the sample is drawn or for any other sample for which you might want to make predictions. If a subset model is selected on the basis of a large R² value or any other criterion commonly used for model selection, then all regression statistics computed for that model under the assumption that the model is given a priori, including all statistics computed by PROC REG, are biased.

While the RSQUARE method is a useful tool for exploratory model building, no statistical method can be relied on to identify the "true" model. Effective model building requires substantive theory to suggest relevant predictors and plausible functional forms for the model.
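
The selection methods described above are requested through the SELECTION= option of the MODEL statement. The following is a hedged sketch rather than code from the original article: it assumes the SASHELP.CARS sample data set is available, the choice of response and regressors is arbitrary, and the SLENTRY= and SLSTAY= values are illustrative, not recommendations.

```sas
/* Sketch only: SASHELP.CARS and all threshold values are illustrative. */
proc reg data=sashelp.cars;
   /* Forward selection: add variables while the entry F test passes SLENTRY= */
   fwd:  model mpg_city = enginesize horsepower weight wheelbase length
               / selection=forward slentry=0.10;

   /* Backward elimination: drop variables that fail the SLSTAY= level */
   bwd:  model mpg_city = enginesize horsepower weight wheelbase length
               / selection=backward slstay=0.10;

   /* Stepwise: entry check plus a stay check after every addition */
   step: model mpg_city = enginesize horsepower weight wheelbase length
               / selection=stepwise slentry=0.15 slstay=0.15;

   /* Maximum R-square improvement: "best" model of each size by switching */
   maxr: model mpg_city = enginesize horsepower weight wheelbase length
               / selection=maxr;

   /* All subsets with 1 to 3 regressors, 2 best per size, */
   /* also reporting adjusted R-square and Mallows' Cp      */
   rsq:  model mpg_city = enginesize horsepower weight wheelbase length
               / selection=rsquare start=1 stop=3 best=2 adjrsq cp;
run;
quit;
```

Each labeled MODEL statement is fitted separately, so the selection summaries can be compared side by side in the output.
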
The RSQUARE method differs from the other selection methods in that RSQUARE always identifies the model with the largest R² for each number of variables considered. The other selection methods are not guaranteed to find the model with the largest R². The RSQUARE method requires much more computer time than the other selection methods, so a different selection method such as the STEPWISE method is a good choice when there are many independent variables to consider.

The ADJRSQ method is similar to the RSQUARE method, except that the adjusted R² statistic is used as the criterion for selecting models, and the method finds the models with the highest adjusted R² within the range of sizes.

The CP method is similar to the ADJRSQ method, except that Mallows' Cp statistic is used as the selection criterion. Models are listed in ascending order of Cp.

Two earlier procedures, PROC RSQUARE and PROC STEPWISE, have been merged into PROC REG, where they correspond to the respective model-selection methods.

In most applications, many of the variables considered have some predictive power, however small. The use of model-selection methods can be time-consuming in some cases because there is no built-in limit on the number of independent variables, and the calculations for a large number of independent variables can be lengthy.

There are many problems with the STEPWISE, FORWARD, and BACKWARD selection techniques. Many of them arise because the theoretical assumptions behind the selection tests are severely violated by the selection process itself. Given such shortcomings, more advanced techniques such as the LASSO and the elastic net are often preferred.

Heteroscedasticity is one of the major violations of the assumptions of linear regression models. In PROC REG, it can be tested easily.
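
One way to run such a heteroscedasticity check is the SPEC option of the MODEL statement, which requests a test of whether the first and second moments of the model are correctly specified (White's test). The sketch below is an illustration under stated assumptions, not the article's own code: it uses the SASHELP.CARS sample data set and an arbitrary model, and it adds the HCC option to print heteroscedasticity-consistent standard errors alongside the usual ones.

```sas
/* Sketch only: SASHELP.CARS and the chosen model are illustrative. */
proc reg data=sashelp.cars;
   /* SPEC: test of first and second moment specification          */
   /* HCC:  heteroscedasticity-consistent standard errors           */
   model mpg_city = horsepower weight / spec hcc;
run;
quit;
```

A small p-value for the specification test suggests that the constant-variance assumption is doubtful for this model, in which case the heteroscedasticity-consistent standard errors are the safer basis for inference.
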
This page was last modified on 27 June.
